Enhancing ReactJS Code Generation with LLMs

At Anima, we generate code. It’s what we’ve been doing for the last 6 years. Over time, we keep making the code we generate better and better. But there is so much more we plan to do so that our generated code incorporates more of what we do as front-end developers.

Front-end code has many layers. The first layer is the static code described in HTML and CSS. On top of that, there is responsive behavior. Then there is interactivity, accessibility, and networking. Not to mention localization, SEO, event tracking, and external libraries such as FullStory and Sentry.

There are so many things front-end code is responsible for that it would be impossible for our code generation platform to accommodate all of them.

With the introduction of large language models (LLMs) and generative AI, we can now add many of these layers to our pre-generated code with little effort.

The challenge with LLMs is that asking them for too many things at once quickly degrades their results.

In addition, we need to start with something reasonably close to the expected result. For example, although there are demos of GPT transforming a snapshot of a webpage into HTML and CSS, the results are still far from what we would expect. LLMs are super effective when we ask them to perform tasks for which massive numbers of examples exist.

Asking an LLM to add a little bit of code to existing code is fairly straightforward. However, asking it to take a snapshot of a webpage and transform it into a full-blown web app with localization, accessibility, and the whole shebang will likely end in frustration on our side.

How it works

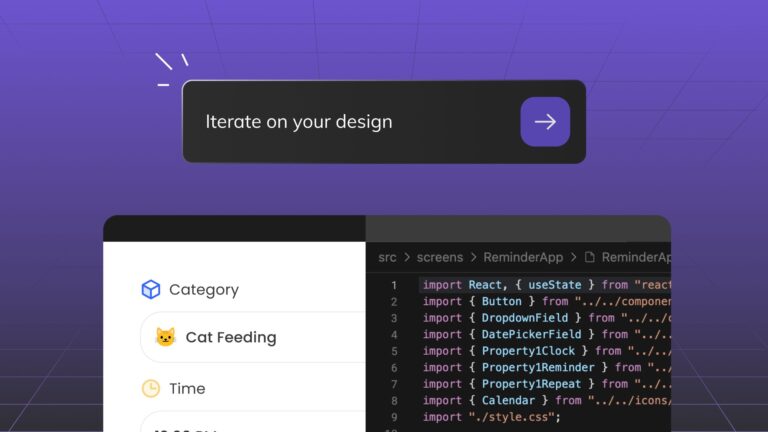

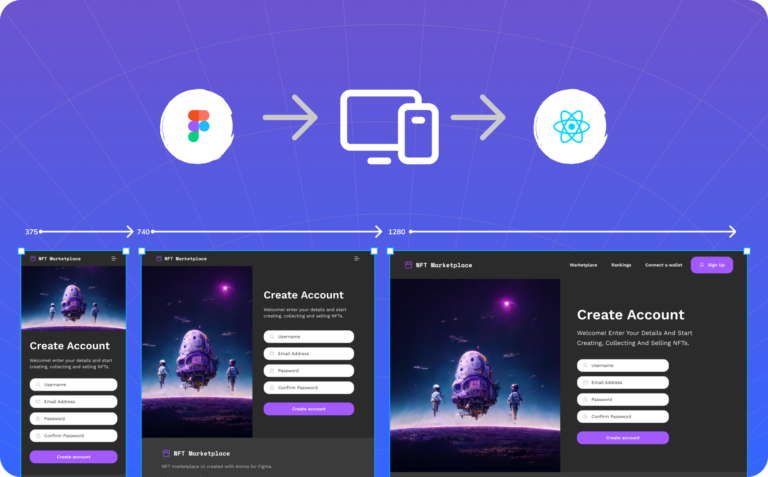

We start with a Figma file and use Anima to export React code. The React code that Anima generates is optimized for high fidelity. That means that running it in the browser should result in a UI that looks identical to the original Figma file.

Now that we have React code, we use an LLM to enhance it with simple prompts.
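This step can be sketched as a chain of small, single-purpose requests, in line with the earlier point that LLMs do better when asked for one thing at a time. This is a minimal sketch, not Anima's actual pipeline: `LlmClient`, `buildEnhancementPrompt`, and `enhanceComponent` are hypothetical names, and the model call is injected as a plain function so any provider (or a test stub) can be plugged in.

```typescript
// Hypothetical signature for whichever LLM API is used: prompt in, completion out.
type LlmClient = (prompt: string) => Promise<string>;

// Builds one focused prompt: a single instruction plus the current component source.
function buildEnhancementPrompt(componentSource: string, instruction: string): string {
  return [
    "You are editing an existing React component.",
    `Task: ${instruction}.`,
    "Keep the existing markup and styling unchanged and return only the updated code.",
    "",
    componentSource,
  ].join("\n");
}

// Applies enhancements one at a time, feeding each result into the next prompt,
// instead of asking for every layer in a single request.
async function enhanceComponent(
  componentSource: string,
  instructions: string[],
  llm: LlmClient,
): Promise<string> {
  let current = componentSource;
  for (const instruction of instructions) {
    current = await llm(buildEnhancementPrompt(current, instruction));
  }
  return current;
}
```

For example, `enhanceComponent(source, ["add localization to the button", "add accessibility features"], callModel)`, where `callModel` is your own (hypothetical) wrapper around an LLM API, would issue two requests, the second one operating on the already-localized code.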

Localization

Consider localization as an example. Localization works by presenting the copy (text) of web components dynamically, based on the user’s language, rather than hard-coding it.

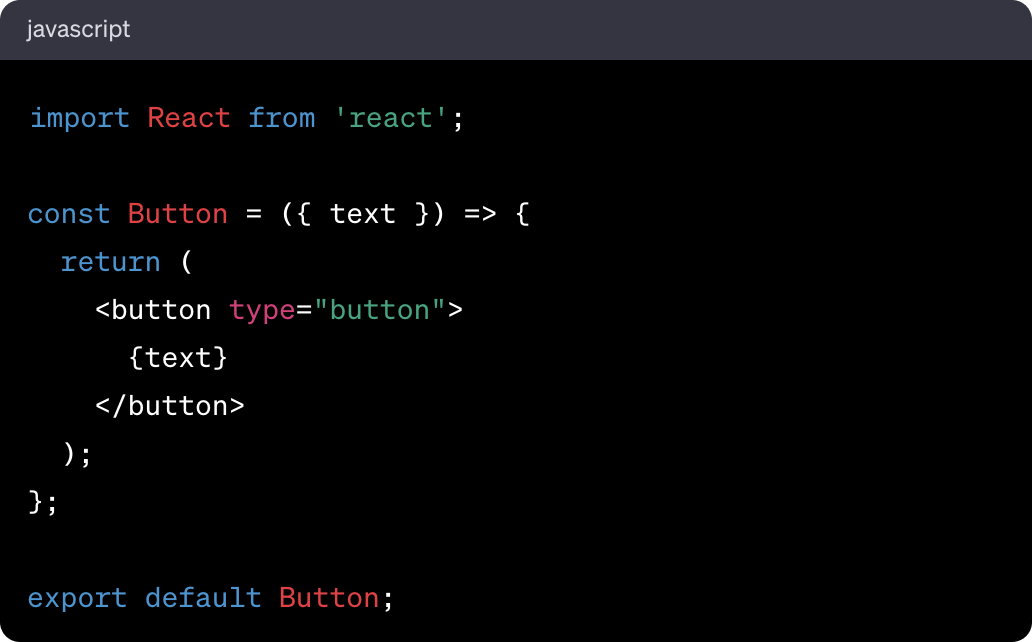

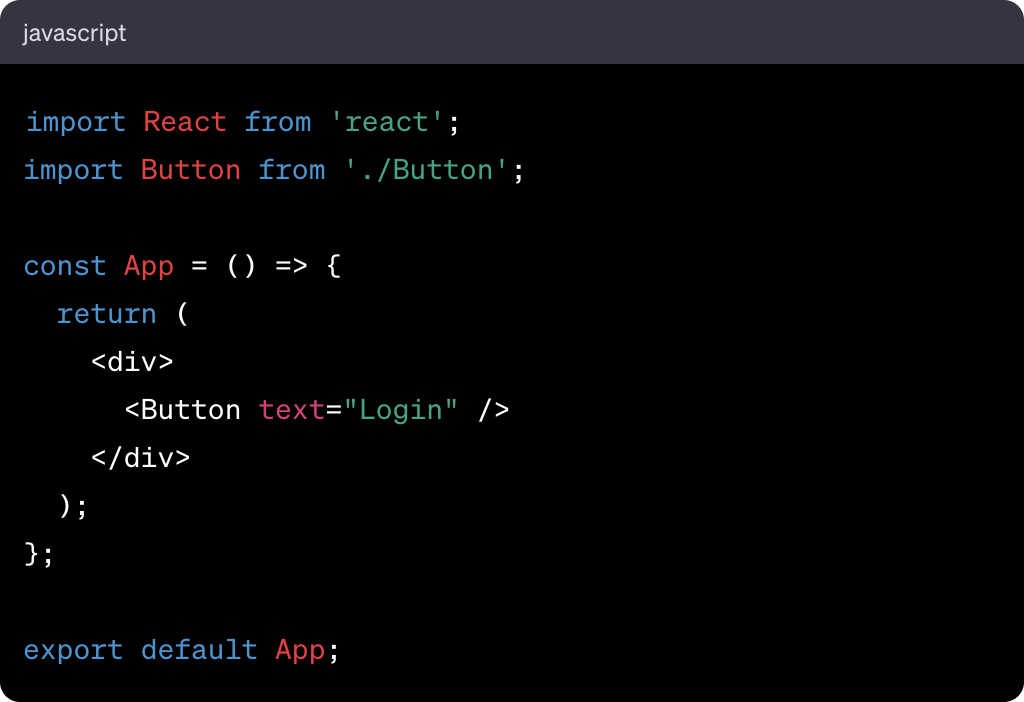

Without localization, a React component might look like this:

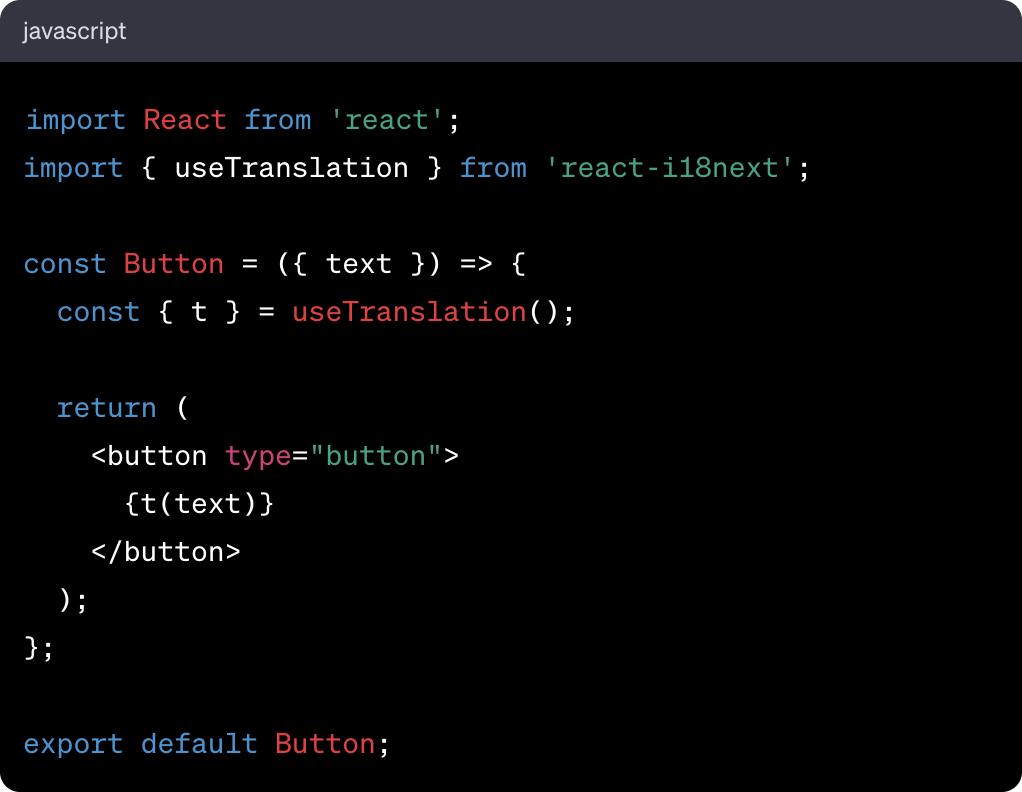

With localization:
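A minimal sketch of what a localized button might look like, assuming a hypothetical in-memory message catalog, a hypothetical `t()` helper, and a hypothetical `button.submit` key with made-up English and Spanish copy. To keep it runnable without installing React, the component returns a plain object standing in for a React element (`ElementSketch` is also hypothetical); in a real app it would return JSX, and an LLM would more likely reach for a library such as react-i18next, whose `useTranslation` hook returns a similar `t` function.

```typescript
// Minimal stand-in for a React element so the sketch runs without installing React.
interface ElementSketch {
  type: string;
  props: Record<string, unknown>;
  children: string;
}

type Locale = "en" | "es";

// Hypothetical message catalog; real projects usually keep these in JSON files
// loaded by a library such as react-i18next or react-intl.
const messages: Record<Locale, Record<string, string>> = {
  en: { "button.submit": "Submit" },
  es: { "button.submit": "Enviar" },
};

// Returns the copy for `key` in `locale`, falling back to English, then to the key itself.
function t(key: string, locale: Locale): string {
  return messages[locale][key] ?? messages.en[key] ?? key;
}

// The label is no longer hard-coded: it is looked up for the active locale.
function LocalizedButton({ locale }: { locale: Locale }): ElementSketch {
  return {
    type: "button",
    props: { className: "button" },
    children: t("button.submit", locale),
  };
}
```

Falling back to the key itself makes missing translations visible during development instead of rendering an empty button.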

Adding this functionality was as simple as using the prompt “add localization to the button”. It’s an easy task for an LLM because there are plenty of examples, tutorials, and documentation out there that the model has been trained on.

Accessibility

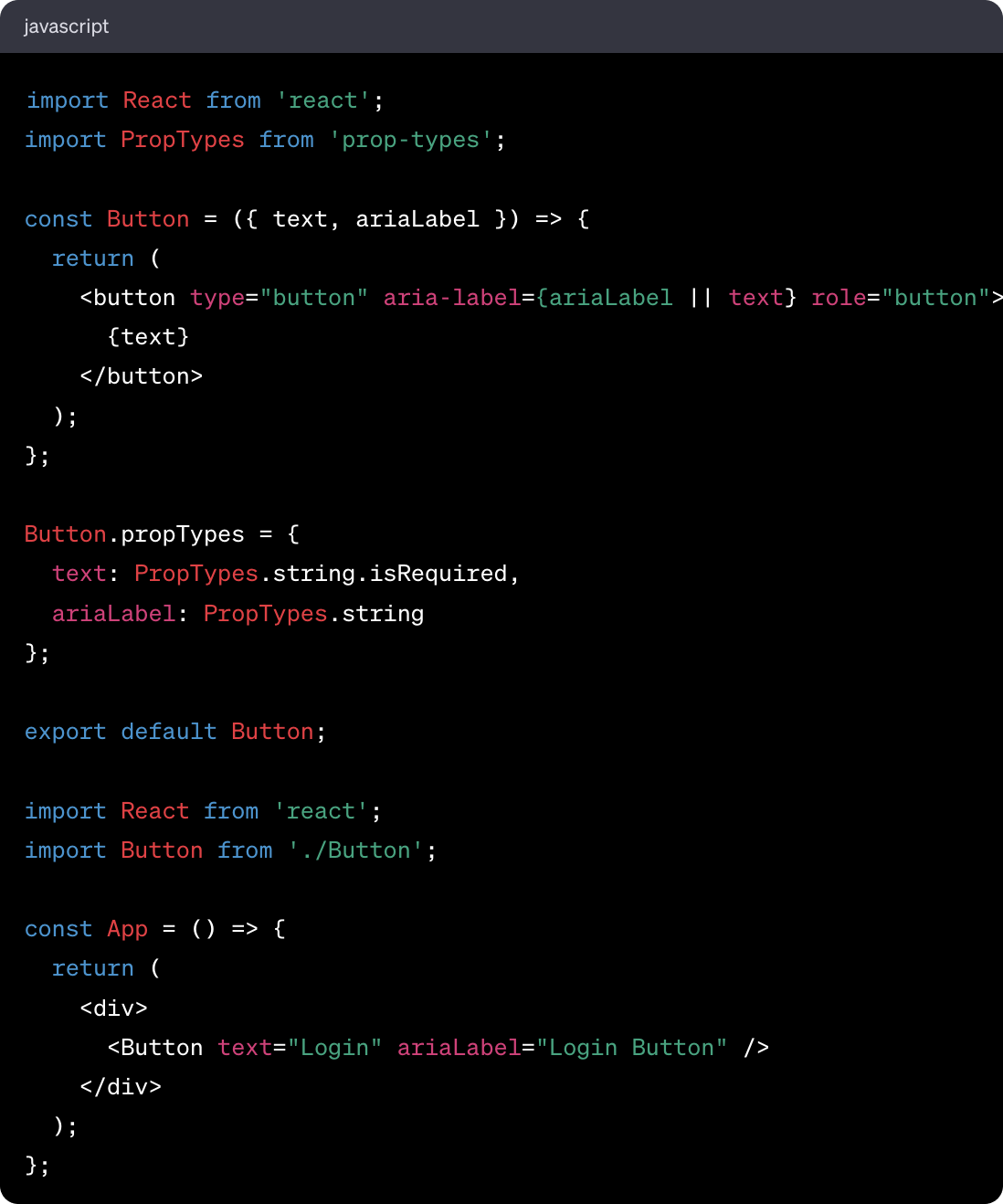

An LLM can also enhance the same button with accessibility features.

For a prompt such as “add accessibility features”, the result would be:
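A minimal sketch of the kind of changes such a prompt typically produces, assuming (as an illustration only) that the generated button is a styled `<div>`. The added `role`, `tabIndex`, and `aria-disabled` attributes plus Enter/Space handling give it the semantics and keyboard behavior a native `<button>` has out of the box. `AccessibleButton`, `ElementSketch`, and `KeyEventLike` are hypothetical names, and the component returns a plain object in place of a React element so it runs without React.

```typescript
// Minimal stand-in for a React element so the sketch runs without installing React.
interface ElementSketch {
  type: string;
  props: Record<string, unknown>;
  children: string;
}

// The keyboard event fields this sketch needs (a subset of React's KeyboardEvent).
interface KeyEventLike {
  key: string;
  preventDefault: () => void;
}

interface AccessibleButtonProps {
  label: string;
  onActivate: () => void;
  disabled?: boolean;
}

// Assumes the generated button is a styled <div>; the attributes below add
// button semantics and keyboard support that a <div> lacks by default.
function AccessibleButton({ label, onActivate, disabled = false }: AccessibleButtonProps): ElementSketch {
  const activate = (): void => {
    if (!disabled) onActivate();
  };
  return {
    type: "div",
    props: {
      role: "button", // announced as a button by screen readers
      tabIndex: disabled ? -1 : 0, // reachable with the Tab key unless disabled
      "aria-disabled": disabled, // exposes the disabled state to assistive tech
      onClick: activate,
      onKeyDown: (event: KeyEventLike): void => {
        // Native buttons respond to Enter and Space, so mirror that here.
        if (event.key === "Enter" || event.key === " ") {
          event.preventDefault(); // keeps Space from scrolling the page
          activate();
        }
      },
    },
    children: label,
  };
}
```

If the generated markup already uses a native `<button>`, the keyboard handling is unnecessary and the enhancement would mostly come down to labels and states.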

Tracking

Let’s say we want to track usage of our website or app.

In this example, using the prompt “add event tracking with Google Tag Manager” will result in the following enhancement:
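A minimal sketch of such an enhancement, using the standard Google Tag Manager pattern of pushing an object with an `event` key onto the data layer. The event name `button_click` and the `button_label` parameter are hypothetical and would need to match your GTM triggers and variables; `trackEvent`, `TrackedButton`, and `ElementSketch` are illustrative names. The data layer is passed in explicitly (in the browser it is `window.dataLayer`) so the sketch also runs outside a browser.

```typescript
// Minimal stand-in for a React element so the sketch runs without installing React.
interface ElementSketch {
  type: string;
  props: Record<string, unknown>;
  children: string;
}

// In the browser this is `window.dataLayer`, set up by the Google Tag Manager snippet.
type DataLayer = Array<Record<string, unknown>>;

// Pushes a custom event; a GTM "Custom Event" trigger can match on the `event` name.
// `event` is spread last so params cannot overwrite it.
function trackEvent(dataLayer: DataLayer, event: string, params: Record<string, unknown> = {}): void {
  dataLayer.push({ ...params, event });
}

// The click handler reports the interaction to GTM.
function TrackedButton({ label, dataLayer }: { label: string; dataLayer: DataLayer }): ElementSketch {
  return {
    type: "button",
    props: {
      // "button_click" and "button_label" are hypothetical names; match them to your GTM setup.
      onClick: () => trackEvent(dataLayer, "button_click", { button_label: label }),
    },
    children: label,
  };
}
```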

Conclusion

As the examples above show, once a Figma design has been converted into React code that renders a visually identical web page, it’s easy to leverage the new and exciting technology of large language models (LLMs) to extend it.

With just a few prompts, we can make our components rich and elaborate.
