Introducing Scooby: a free regression and fidelity testing tool

TL;DR

We built a free testing tool that can measure fidelity between different sources. Try it out!

Background

Anima is a platform for converting designs to code, so a big part of our work is ensuring that the resulting code renders exactly like the source design. That means running many automated tests, each of which launches a browser, renders the code, takes a snapshot, and compares it to the source image.

We were using off-the-shelf products for visual regression testing, but as we added more and more tests, the cost quickly skyrocketed (almost $3,000 per month). That's when we decided to build a testing framework that would let us perform not only regression testing but also fidelity testing.

There are plenty of visual regression testing tools, but since we’re building a platform that converts design to code and code to design, we need additional testing functionality. Specifically, we need “fidelity testing.”

Regression testing vs. Fidelity testing

While fidelity and regression tests share some common characteristics, they are designed to answer two very different questions:

- Regression tests compare two different versions of the same dataset. For example, a regression test might verify that the output of a code generator inside a feature branch is not different from a known good state on the main branch.

- Fidelity tests compare the same version of two different datasets. For example, a fidelity test might compare how visually similar a given web page is to its Figma design.

We can draw several conclusions from these definitions:

- Regression tests usually produce a binary good/bad result for each test entry, while fidelity tests produce a continuous similarity ratio.

- Regression tests aim to have zero differences between the two datasets, as we are testing the same system at two different points in time.

- Fidelity tests can show differences and still pass, since a perfect match between datasets produced by two different systems may not be achievable.
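To make the difference concrete, here is a minimal Python sketch (not Scooby's implementation) that derives both kinds of result from the same naive per-pixel comparison. It assumes images are already decoded into equal-sized grids of RGB tuples; the function names and the 0.95 pass threshold are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B)
Image = List[List[Pixel]]     # rows of pixels


def matching_ratio(a: Image, b: Image) -> float:
    """Return the fraction of pixel positions whose RGB values are identical."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same dimensions")
    total = sum(len(row) for row in a)
    if total == 0:
        return 1.0  # two empty images are trivially identical
    same = sum(pa == pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return same / total


def regression_passes(baseline: Image, candidate: Image) -> bool:
    # Same system at two points in time: any difference is a failure.
    return matching_ratio(baseline, candidate) == 1.0


def fidelity_score(design: Image, rendered: Image) -> float:
    # Two different systems: report a continuous similarity ratio in [0, 1].
    return matching_ratio(design, rendered)


def fidelity_passes(score: float, threshold: float = 0.95) -> bool:
    # Hypothetical threshold: some differences are tolerated.
    return score >= threshold
```

A real tool would likely use a tolerant or perceptual metric rather than exact pixel equality, but the shape of the result is the point: a regression check collapses to a boolean, while a fidelity check reports a ratio that a threshold turns into a verdict.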

Regression/fidelity visual testing

Every code change (pull request) we make to our code generation engine triggers two test pipelines.

1. Visual regression testing

To conduct visual regression testing, we capture snapshots of the rendered output of every front-end framework we support, such as HTML/CSS and React. These snapshots are then compared with our reference (the main branch) to identify any regressions our changes may have introduced.
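Before any image comparison, each test entry from a pull request run has to be matched with its counterpart in the reference run. The sketch below is a hypothetical illustration of that bookkeeping, not Scooby's code: it assumes artifacts are keyed by test name and uses a SHA-256 digest as a cheap first pass, so only entries whose bytes differ would need a closer (for example, pixel-level) look.

```python
import hashlib
from typing import Dict, List


def digest(data: bytes) -> str:
    # Byte-identical artifacts produce identical digests.
    return hashlib.sha256(data).hexdigest()


def classify(reference: Dict[str, bytes], current: Dict[str, bytes]) -> Dict[str, List[str]]:
    """Group test entries into added, removed, changed, and unchanged."""
    report: Dict[str, List[str]] = {"added": [], "removed": [], "changed": [], "unchanged": []}
    # Walk the union of test names so nothing present on only one side is missed.
    for name in sorted(reference.keys() | current.keys()):
        if name not in reference:
            report["added"].append(name)
        elif name not in current:
            report["removed"].append(name)
        elif digest(reference[name]) != digest(current[name]):
            report["changed"].append(name)
        else:
            report["unchanged"].append(name)
    return report
```

Storing digests alongside artifacts would let a run skip downloading unchanged reference images entirely; that optimization is an assumption here, not something the post describes.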

Running regression tests takes a single line of code:

2. Visual fidelity testing

Similar to regression tests, fidelity tests are triggered with the following command:

Scooby uses AWS S3 to store artifacts. Here is a high-level diagram of the Scooby architecture:
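How artifacts are organized inside S3 isn't covered here. One plausible scheme, sketched purely as an assumption, groups every artifact of a run under a prefix built from a project name and commit SHA, so a pull request run and the main-branch reference it is compared against live side by side. The key format and the `artifact_key` helper are hypothetical.

```python
def artifact_key(project: str, commit_sha: str, kind: str, test_name: str, ext: str = "png") -> str:
    """Build a hypothetical S3 object key for a single test artifact."""
    if kind not in ("regression", "fidelity"):
        raise ValueError(f"unknown test kind: {kind!r}")
    # Trim stray slashes and spaces so the name reads cleanly in the key.
    name = test_name.strip("/").replace(" ", "_")
    # One prefix per (project, commit) keeps a whole run listable as a unit.
    return f"{project}/{commit_sha}/{kind}/{name}.{ext}"
```

A key built this way could then be passed as the `Key` argument to boto3's S3 client `put_object(Bucket=..., Key=..., Body=...)` when uploading a snapshot.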

The CI Setup

Once all tests pass, Scooby posts the results to the original PR that triggered the tests:
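As an illustration of the reporting step, the following hypothetical function turns regression and fidelity results into a Markdown comment body. The section titles, table layout, and 0.95 threshold are assumptions, not Scooby's actual output. On GitHub, such a body could be posted through the REST endpoint `POST /repos/{owner}/{repo}/issues/{issue_number}/comments`, since pull request conversation comments go through the issues API.

```python
from typing import Dict, List


def format_pr_comment(changed: List[str], fidelity: Dict[str, float], threshold: float = 0.95) -> str:
    """Render test results as a Markdown comment body (hypothetical format)."""
    lines = ["### Visual regression", ""]
    if changed:
        lines += [f"- changed: `{name}`" for name in sorted(changed)]
    else:
        lines.append("No differences from the reference.")
    lines += ["", "### Visual fidelity", "", "| Test | Similarity | Status |", "| --- | --- | --- |"]
    # Worst scores first, so the entries that need attention are at the top.
    for name, score in sorted(fidelity.items(), key=lambda item: item[1]):
        status = "pass" if score >= threshold else "below threshold"
        lines.append(f"| {name} | {score:.1%} | {status} |")
    return "\n".join(lines)
```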

Text-based testing

Scooby does more than just compare two snapshots of rendered web code.

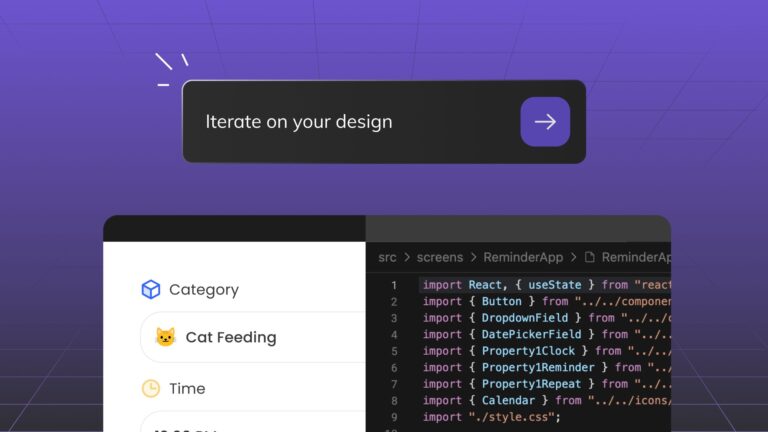

Because our platform generates code, we want a seamless way to compare how the code we generate looks before and after every change we make. That's why we built Scooby to be generic: it can compare other source types besides visual ones.

Here is an example of Scooby comparing two ReactJS source code files:
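For text sources, a line-based diff is the natural counterpart to a pixel diff. This minimal sketch, which is not how Scooby renders its comparison, uses Python's standard `difflib.unified_diff` to compare the main-branch and pull-request versions of a generated file; the `main/` and `pr/` path prefixes are hypothetical labels.

```python
import difflib


def source_diff(before: str, after: str, path: str) -> str:
    """Return a unified diff between two versions of a generated source file."""
    return "".join(
        difflib.unified_diff(
            before.splitlines(keepends=True),  # keep newlines so joined output is well-formed
            after.splitlines(keepends=True),
            fromfile=f"main/{path}",
            tofile=f"pr/{path}",
        )
    )
```

An empty result means the generated file is unchanged, which is exactly the zero-difference outcome a regression test expects.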

And here is an example of a JSON file comparison:
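For structured data, comparing parsed values rather than raw text avoids false positives from key order or whitespace. Here is a minimal recursive sketch, assuming both inputs are valid JSON; the `$`-rooted path notation and the message format are illustrative choices, not Scooby's.

```python
import json
from typing import Any, List


def json_diff(a: Any, b: Any, path: str = "$") -> List[str]:
    """Recursively list the paths at which two parsed JSON values differ."""
    if isinstance(a, dict) and isinstance(b, dict):
        diffs = []
        for key in sorted(a.keys() | b.keys()):  # key order is irrelevant
            child = f"{path}.{key}"
            if key not in a:
                diffs.append(f"added {child}")
            elif key not in b:
                diffs.append(f"removed {child}")
            else:
                diffs.extend(json_diff(a[key], b[key], child))
        return diffs
    if isinstance(a, list) and isinstance(b, list):
        diffs = []
        for i in range(max(len(a), len(b))):  # array order does matter
            child = f"{path}[{i}]"
            if i >= len(a):
                diffs.append(f"added {child}")
            elif i >= len(b):
                diffs.append(f"removed {child}")
            else:
                diffs.extend(json_diff(a[i], b[i], child))
        return diffs
    # Scalars or mismatched container types: compare the type too, so that
    # 1 and true, which are equal in Python, are still reported as different.
    if type(a) is type(b) and a == b:
        return []
    return [f"changed {path}: {a!r} -> {b!r}"]


def compare_json_text(before: str, after: str) -> List[str]:
    """Parse two JSON documents and diff them, ignoring whitespace and key order."""
    return json_diff(json.loads(before), json.loads(after))
```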

In a nutshell

We hope you’ll find Scooby useful for your testing purposes. We welcome contributions from the community and look forward to growing the functionality of the project. Try it out today!
